Peabody College’s Office of Equity, Diversity and Inclusion sent an email to students on Feb. 16 about the Feb. 13 shooting at Michigan State University, in which three students were fatally shot. The email urged solidarity and expressed sympathy, asking us to “come together as a community to reaffirm our commitment to caring for one another” and to “promote a culture of inclusivity on our campus” so “we can honor the victims of this tragedy.” The importance of these sentiments, however, is overshadowed by the fact that they were generated by ChatGPT.

Over the past few months, I have engaged in numerous discussions with professors and peers and read many op-eds lamenting ChatGPT’s potentially disastrous effects on education. The most commonly expressed fear is that students will use ChatGPT to avoid writing their own university work.

It is, therefore, highly ironic that Vanderbilt used ChatGPT to avoid writing statements to the community. It is especially disturbing that it relied on ChatGPT to craft what should have been a genuine, heartfelt expression of sympathy for the three dead and many injured students in Michigan. I write this article not to attack Vanderbilt or any specific person, but rather to reflect on the potential issues posed by artificial intelligence.

Vanderbilt’s Associate Dean for Equity, Diversity and Inclusion Nicole Joseph apologized on Feb. 17 for using ChatGPT to write the initial email, calling the decision “poor judgment.”

“As with all new technologies that affect higher education, this moment gives us all an opportunity to reflect on what we know and what we still must learn about AI,” Joseph said in her email.

ChatGPT is problematic for many reasons, not least that it can be a form of mass plagiarism. But beyond that, writing as a process, so revered on college campuses that writing courses are often required of all students, means finding the most effective word or simile, the most persuasive argument and, above all, revising and rethinking to promote growth as a writer and a critical thinker. When the subject is especially sensitive, the writing process itself can help the author work through difficult emotions, and the finished words can help their recipients do the same.

Ostensibly, the purpose of Peabody’s email was to help students deal with grief and fear, as well as come together to honor the victims of the shooting. With the knowledge that ChatGPT authored the sentiments in the email, it is hard to ignore the hypocrisy of our administration’s words.

“We must continue to engage in conversations about how we can do better, learn from our mistakes, and work together to build a stronger, more inclusive community,” the email reads.

As many students have highlighted, it is impossible to take this statement seriously when it clearly did not come from the heart. The idea that this email was part of an attempt to engage in “conversations” suggests a bleak irony if one side of the conversation is actually an AI bot. No matter how authentic ChatGPT’s words may sound, there is no heart from which any such emotion could emanate.

Vanderbilt’s unfortunately bungled foray into ChatGPT raises a further question: What are condolence emails after mass tragedies for? College students receive countless emails responding to tragedies from university administrators aiming to appear invested in their students’ mental health and well-being. At Vanderbilt, we regularly receive emails about anti-Asian violence, abortion access and other tragedies in our local and national community. In these emails, the university usually outlines actions it plans to take to help students grieve or move forward. If the recent Peabody EDI Office message shows that so little actual thought goes into crafting these emails, we must question whether these promised plans will ever be realized.

I acknowledge how difficult it must be to address tragedies and to think of the right words to bring comfort to a huge and diverse community. I don’t believe the individuals responsible for sending the email meant to do anything other than bring comfort. But that a university official felt the need to consult ChatGPT about this topic speaks to an institutional drive to market the message correctly and to avoid any backlash, no matter how minuscule. When responding to a tragedy, the impulse should be to comfort, build community and be personal, not to send a reproducible, inherently unfeeling PR response.

Just as concerning as the lack of care displayed in the university’s email is its inaccuracy in describing the shooting. The Hustler pointed out factual inaccuracies in Vanderbilt’s ChatGPT-generated message. For example, the email referred to “recent Michigan shootings,” when there was in fact only one. This error raises further questions: Did the university’s administrators even read this email before it went out? Did they care at all what it said? Do they care about students’ grief and fear, or are they merely paying lip service to protect their image?

This incident demonstrates the vast gulf between what the administration expects of students and the corners it’s willing to cut to further the appearance of community-building. How are students expected to take Vanderbilt’s guidelines surrounding plagiarism seriously when our very own leaders employ these tools to produce authentic-sounding messages of care?

Institutional hypocrisy aside, how should we process the insincerity of their feelings?

This story was originally published on The Vanderbilt Hustler on February 26, 2023.

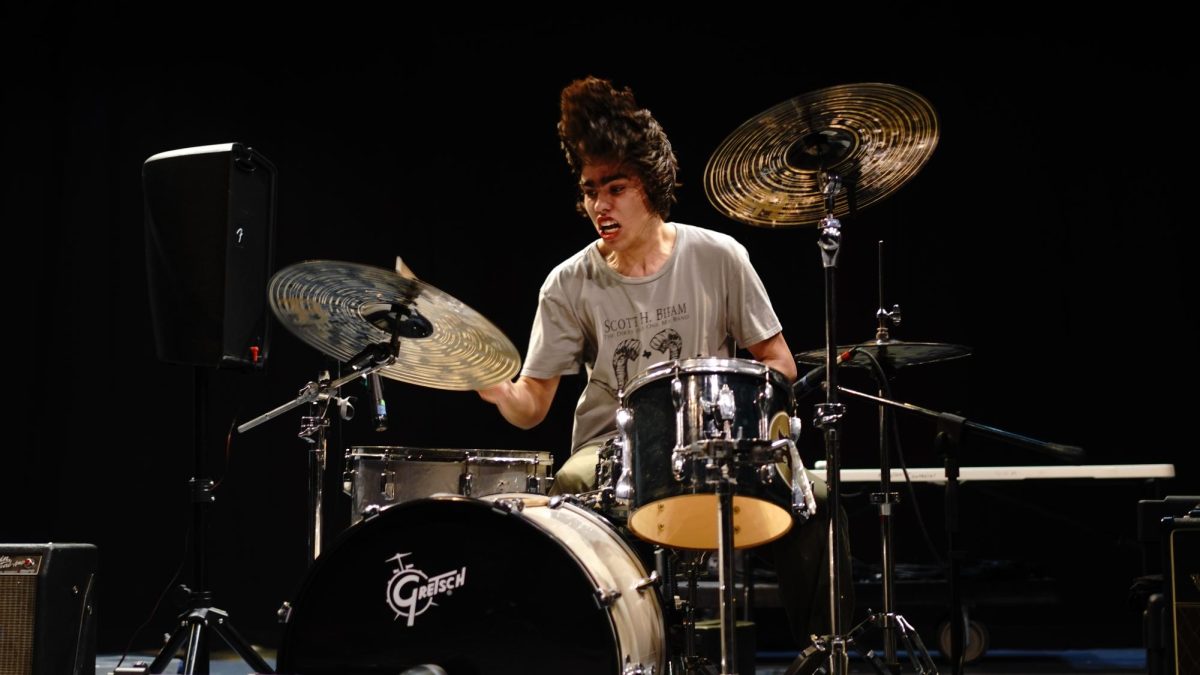

![IN THE SPOTLIGHT: Junior Zalie Mann performs “I Love to Cry at Weddings,” an ensemble piece from the fall musical Sweet Charity, to prospective students during the Fine Arts Showcase on Wednesday, Nov. 8. The showcase is a compilation of performances and demonstrations from each fine arts strand offered at McCallum. This show is put on so that prospective students can see if they are interested in joining an academy or major.

Sweet Charity originally ran the weekends of Sept. 28 and Oct. 8, but made a comeback for the Fine Arts Showcase.

“[Being at the front in the spotlight] is my favorite part of the whole dance, so I was super happy to be on stage performing and smiling at the audience,” Mann said.

Mann performed in both the musical theatre performance and dance excerpt “Ethereal,” a contemporary piece choreographed by the new dance director Terrance Carson, in the showcase. With also being a dance ambassador, Mann got to talk about what MAC dance is, her experience and answer any questions the aspiring arts majors and their parents may have.

Caption by Maya Tackett.](https://bestofsno.com/wp-content/uploads/2024/02/53321803427_47cd17fe70_o-1-1200x800.jpg)

![SPREADING THE JOY: Sophomore Chim Becker poses with sophomores Cozbi Sims and Lou Davidson while manning a table at the Hispanic Heritage treat day during lunch of Sept 28. Becker is a part of the students of color alliance, who put together the activity to raise money for their club.

“It [the stand] was really fun because McCallum has a lot of latino kids,” Becker said. “And I think it was nice that I could share the stuff that I usually just have at home with people who have never tried it before.”

Becker recognizes the importance of celebrating Hispanic heritage at Mac.

“I think its important to celebrate,” Becker said. “Because our culture is awesome and super cool, and everybody should be able to learn about other cultures of the world.”

Caption by JoJo Barnard.](https://bestofsno.com/wp-content/uploads/2024/01/53221601352_4127a81c41_o-1200x675.jpg)