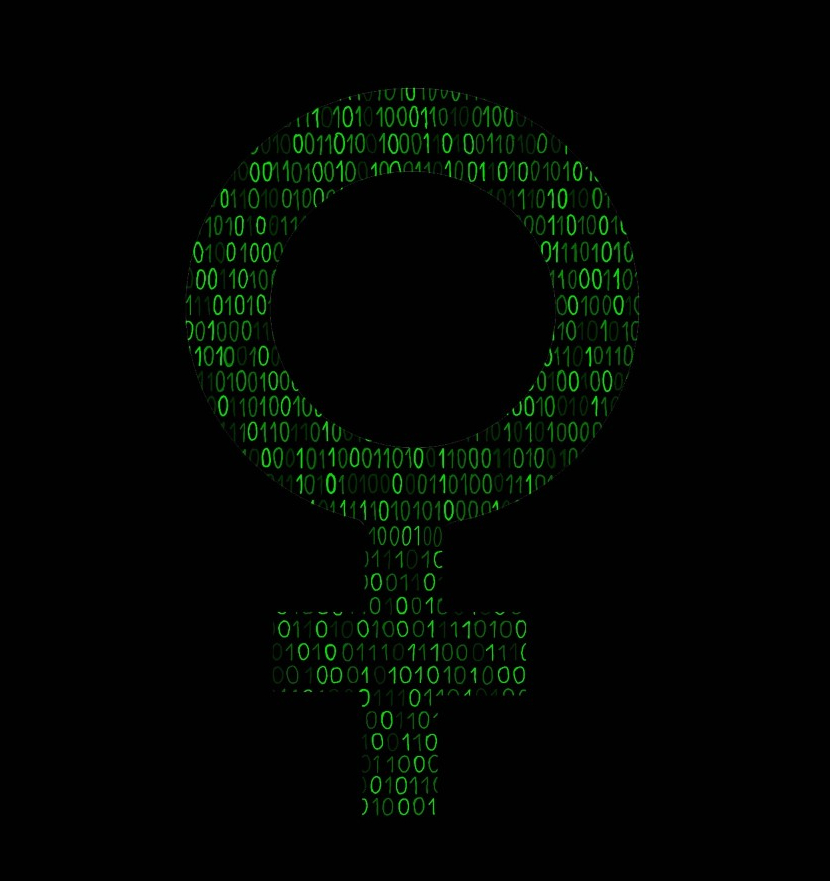

As Women’s History Month moves along, so do the development and expanding influence of artificial intelligence technology. From ChatGPT to search engines, the world has become increasingly reliant on, and equally fascinated with, AI. Yet gender bias from developers and internet sources seeps into AI responses, potentially setting up a future riddled with a newfound basis for sexism.

The world of AI technology is dominated by men, with women accounting for only 22% of all AI jobs globally. People of color, regardless of gender, and members of the LGBTQ+ community have even less representation. As a result, straight white men make up the vast majority of workers across technology jobs, including AI development. Yet software development used to be a predominantly female field, with women holding 70% of Silicon Valley programming positions as recently as 1980. Since then, a lack of venture capital funding for female founders, including in technology, has contributed to a sharp decline in women’s participation and equality in the field. Because the people developing many AI tools lack diversity, those tools are more likely to produce biased responses.

This homogeneity has been shown to directly affect the output of AI tools already in use. In 2017, Amazon scrapped an AI-powered recruiting engine that rated job applicants on a five-star scale because it favored men over women. The engine studied 10 years’ worth of past applications and used them to guide its recommendations for top applicants. Because most of the applications it studied came from men, it learned to favor resumes that appeared to belong to men, building a gender bias into its own recommendations.

Furthermore, AI language models like the popular ChatGPT are trained on text drawn from the internet and generate responses to prompts based on that material. Because they cannot always tell true from false, they can produce responses that regurgitate sexist beliefs and stereotypes. While OpenAI has policies that prohibit using ChatGPT for hateful or political content, the model’s reliance on potentially unreliable sources still poses a serious risk of untruthful responses. This creates dangerous ground for the spread of sexism at a time when both misinformation and disinformation are widespread. With millions of people using this technology every day, generations of work to break down gender stereotypes could be undone.

For example, when asked to write performance reviews for different jobs, ChatGPT used “she” when describing a kindergarten teacher, receptionist, nurse and designer. It used “he” when describing an engineer, investment banker, mechanic and construction worker. On top of this, it wrote 15% more feedback for employees it described using “she.” This bias is especially alarming given that ChatGPT passed 100 million users in January 2023, making it the fastest-growing consumer application on record at the time.

There is no single answer to all of these problems. Still, something must be done to protect the progress made toward gender equality. For starters, educational programs that teach people about AI and its tendency to produce false and biased information could lessen the impact of these responses on society. Workshops at community centers that show consumers how to use AI while recognizing bias and misinformation could help people benefit from AI’s positive aspects while preventing a regression in parity and acceptance. Greater global efforts to encourage women, people of color and LGBTQ+ people to enter technology could create a better future not only for the development of AI but also for equality as a whole. Within AI companies, there should be more employee training on all kinds of bias, as well as greater transparency between developers and consumers. This way, the world can work together toward a promising future for everyone.

These ideas would only begin to address the problem of bias, but something must be done to stop AI from promoting harmful stereotypes and false information. Without equality and truth, society cannot function ethically or effectively.

This story was originally published on The Statesman on March 16, 2023.