For the past year, many teachers nationwide have been fighting the use of AI in classrooms. Now, the school district is turning the tables.

In August, USD 497 purchased software called Gaggle that advertises itself as a suicide and self-harm prevention measure. It uses artificial intelligence to comb through massive amounts of district data and detect when students or staff are writing about self-harm or using other threatening language. On Nov. 13, district administrators will meet for a training session at district offices to review procedures for implementing Gaggle. Shortly after that meeting, the program will go live in USD 497.

School board member GR Gordon-Ross, who works as a software developer, says Gaggle will help the district comply with the Children’s Internet Protection Act.

“We have several obligations to protect the children within our district,” Gordon-Ross said. “And one of those very specific obligations is to be mindful and to watch for signs of bullying, self-harm, anything else that falls under those two categories. And this particular software does that exactly.”

The software carries a hefty initial price tag of $162,000 for three years, but only about $80,000 will come out of the district’s capital outlay fund. The other half is being matched by a Safe and Secure Schools grant.

Gaggle only monitors data that is within the district’s Google Workspace for Education. So whenever a student is signed into their school-provided Google account, their data will be accessible. Gordon-Ross pointed out that there is very little expectation of privacy when using district devices.

“If you’re going to be using an account provided by the district, on the district device, using district resources that operate on the district’s internal network, your expectation of privacy should be much lower than if you’re using your own account on Gmail, or Facebook, or Snapchat, or your own device,” Gordon-Ross said. “The level of privacy expectation should be very different.”

USD 497 Director of Technology David Vignery stressed that the district is not surveilling students.

“That can be looked at as spying, but really it is not, because we own it,” Vignery said. “We’re not looking into phones, we’re not looking into a personal device that people walk on campus with, we don’t do any of that because it’s not possible for us to do.”

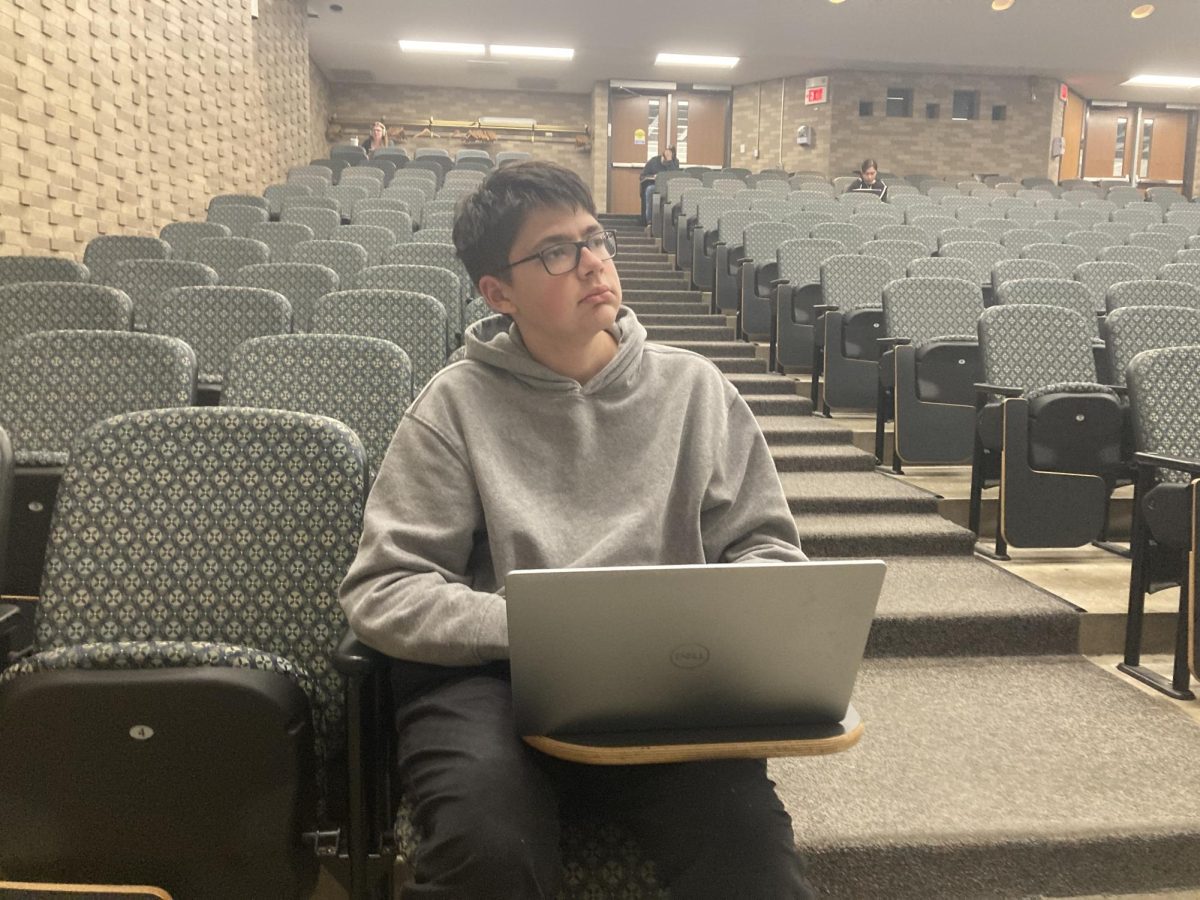

LHS senior Brendan Symons said that despite the legality of collecting the data, it isn’t necessarily a good idea.

“I think that we should draw lines,” Symons said. “I think the school board should be willing to draw a line in the sand on what they are and aren’t willing to do. And maybe consider the fact that even if it’s legal, that doesn’t mean it’s always the right move. The spirit of it is good, but it could have unintended consequences.”

Vignery explained that Gaggle flags content only when it picks up a keyword. When that happens, a Gaggle employee reviews the context of the flagged material. If the reviewer decides that it is a credible threat, Gaggle contacts the district and the material is sent to the administrative level.

“Each building is going to have point people,” Vignery said. “They’re going to be administrators, they’re going to be going to counselors. Most of it will probably hit the administrators first and they will make the decision.”

This new technology comes in the context of AI growing in popularity in education and beyond. Isaiah Hite, one of LHS’ building support technicians, says that he expects AI to only increase in popularity.

“I expect AI to be huge in education,” Hite said. “It seems like the growth is exponential and in the past when we’ve seen stuff like that, it has become a huge part of our life. I don’t know what that looks like, and that’s kind of terrifying.”

In the face of this growth, Hite listed a couple of ways to stay diligent in protecting students in the AI age.

“You do really have to look into the company that you’re working with and the way that they handle your data,” Hite said. “If they’re encrypting it, and they only see it if our end picks something up, then maybe we’re really safe. What you have to look for is how often they’ve been breached, are they certified with a third party.”

Gordon-Ross agreed that there are ways to be proactive, but said that nothing is foolproof.

“In the end, working with tracking companies, they can say all those things,” Gordon-Ross said. “In the end you have to trust them that they’re going to do all the things that they say they’re going to do.”

Everyone The Budget interviewed agreed that there are risks associated with using Gaggle. But Vignery insisted that Gaggle is a piece of software that can save lives. Gaggle was founded on the basis of preventing suicide, and it uses today’s tools to do so.

“We have so many hurting kids, and adults, in this world, that if we can use this product to help understand the child, and understand their difficulties that they’re going through, and keep them safe from harming themselves or committing suicide, one time, then we have to use that program,” Vignery said. “That’s the way I look at it.”

Gordon-Ross agreed. He offered a response to anyone who is concerned about spying and privacy.

“I certainly understand where those people are coming from,” Gordon-Ross said. “I respect the question, and I respect the concern, but if we miss one kid who was in distress that we could have caught…”

This story was originally published on The Budget on November 10, 2023.