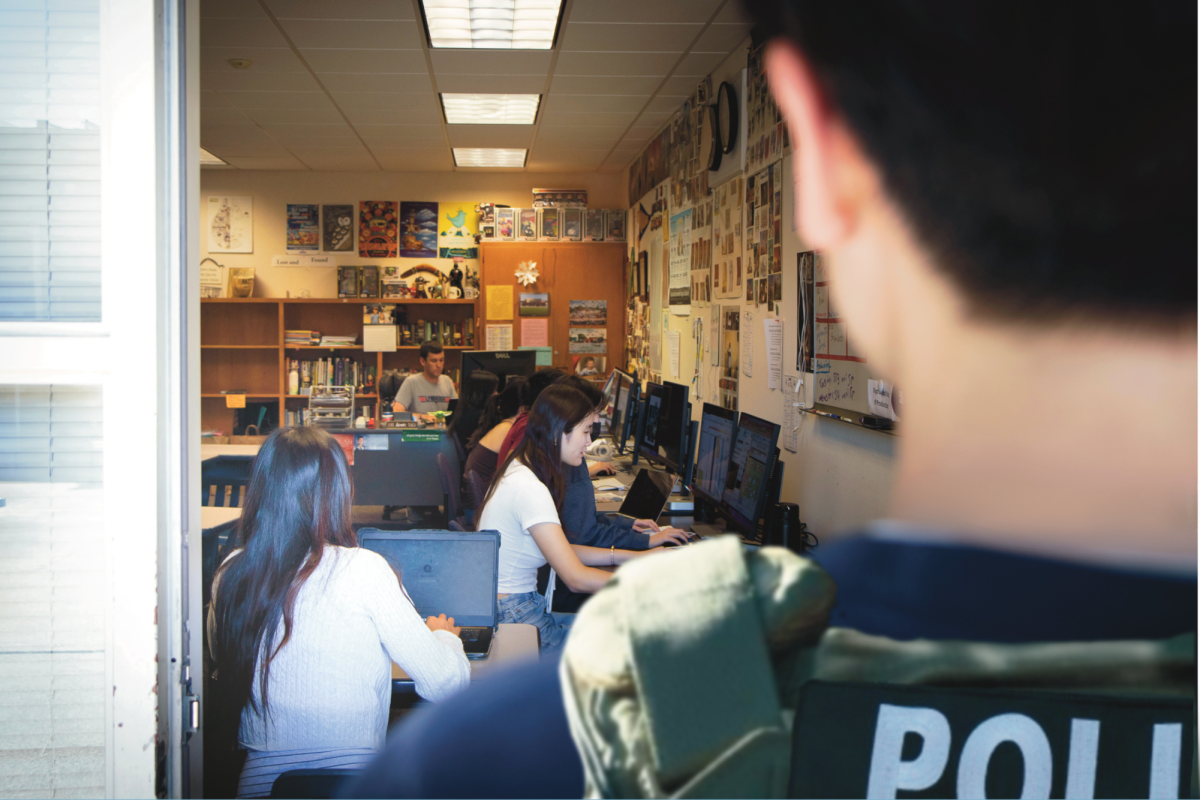

As junior Opal Morris was brought to the office by a security guard, she had no idea why she had been called in. Upon entering, she saw a row of her fellow portfolio photography students already sitting down.

Turns out, Morris and her classmates were called down after their photography was flagged by Gaggle for nudity.

Days after the new Gaggle software was launched in USD 497, almost an entire class of portfolio and AP photography students were called to the office for flags on their photography assignments. Gaggle was launched in November with the intent to prevent suicide and self-harm via AI monitoring of students’ Google accounts.

For administrators to reach out to a student, a file in the student’s Google account, whether a photo, document or video, must be in what Gaggle calls the “red zone.” For photography students, photos for various projects were flagged for what was deemed “nudity.”

Yet all students involved maintain that none of their photos had nudity in them. Some were even able to determine which images were deleted by comparing backup storage systems to what remained on their school accounts. Still, the photos were deleted from school accounts, so there is no way to verify what Gaggle detected. Even school administrators can’t see the images it flags.

“I had to explain in detail every single photo,” Morris said. “It’s definitely made me conscious of what I’m creating and what issues it could provoke with administration, which I don’t think should be an issue that we have to worry about. Especially when we’re creating it for ourselves, portfolios and colleges.”

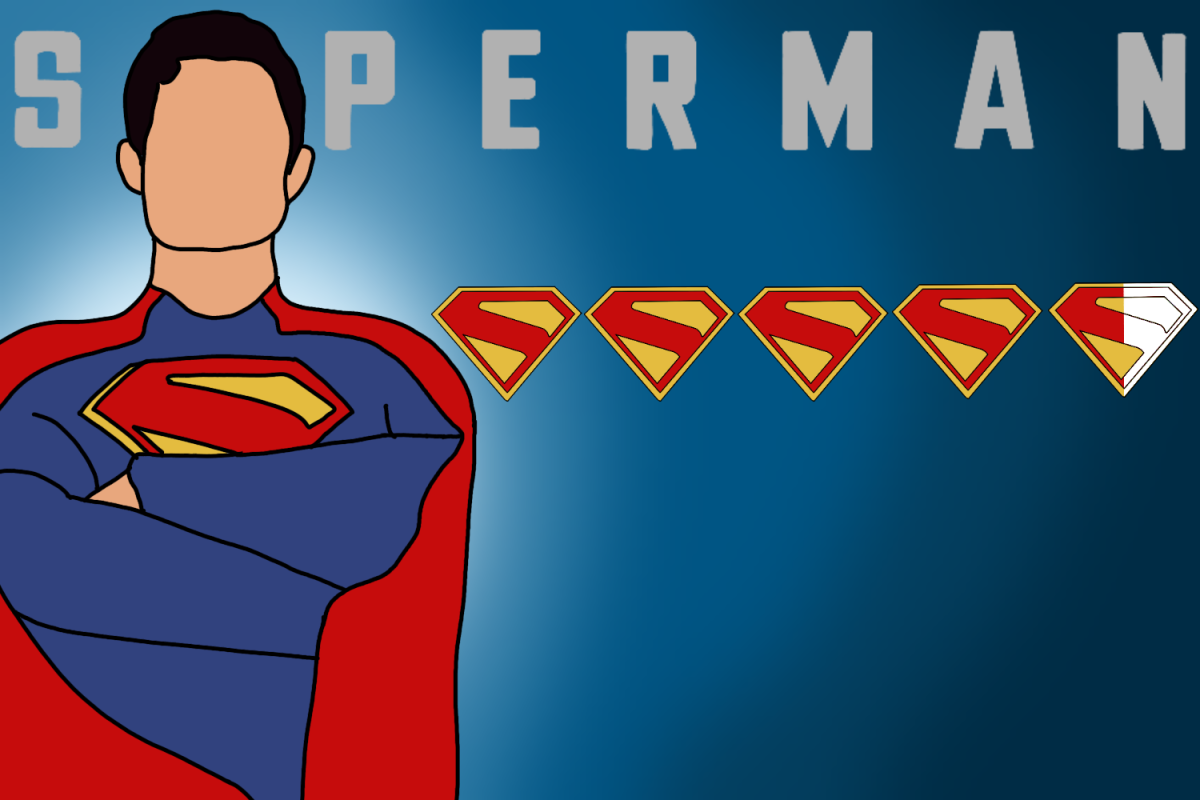

The AI-powered Gaggle sifts through student work on the Google Suite of products, including Google Docs, Drive and Gmail, looking for material it has been programmed to identify. After being flagged, Gaggle employees review the content and determine if schools should be notified. When it comes to images noted to be concerning, they are deleted.

“I wish we would have had more of a warning,” photography teacher Angelia Perkins said. “What is going into someone else’s work where they are supposed to have a right to be able to voice something, I think is a little scary.”

For a file to be marked red like the students’ photos, Gaggle says it is supposed to contain “pornography that appears to include a minor or imminent plan of inappropriate sexual activity.”

“All the kids who do portraits were flagged and went to the office. It’s so interesting,” junior photography student Henry Farthing said. “In my stuff, I don’t see it getting flagged as something, let alone something sexual. I know someone who solely does landscape stuff who was brought in.”

Because the flagged items are deleted from servers, students feel as though they are actively being censored. While the Supreme Court has allowed schools to censor some students’ speech, it is limited to instances when the speech is disruptive to the school environment, obscene or invades the rights of others. Many of the images that have been flagged already would not even violate the school’s permissive dress code, let alone be considered obscene.

“I’ve always been very uncensored of what I wanted to create, and Perkins has her ground rules, but we have creative freedom,” Morris said. “Afterwards, it’s definitely made me conscious of what I’m creating and what issues it could provoke with administration.”

While the district has noted that it has the right and responsibility to monitor activity on student devices and student accounts under its umbrella, students still retain ownership of their work.

“Unless the work is done by a salary (‘work for hire’) or under a contract or an employee handbook that specifies ownership, the normal rule is that the creator owns the work. And that is true even if school equipment is used,” according to the Student Press Law Center.

Besides students’ work being deleted from their drives if flagged, many student artists now feel like they are under an AI surveillance state, with Gaggle’s watchful and inconsistent eyes deciding what could potentially flag them as an artist.

“When the district [buys] this, it makes people a little bit afraid, kind of like Big Brother is watching. I think there’s a little bit better ways to deal with that,” Perkins said.

On top of the seemingly obvious issues, studies have shown that this type of AI discriminates against people of color, whether that means distorting how they are shown in generative images or tending to “stereotype or censor Black history and culture,” according to the New York Times.

“Students of color and LGBTQ+ students, are far more likely to be flagged. Research has shown that language processing algorithms are less successful at analyzing language of people of color, especially African American dialects,” three senators said in a letter to Gaggle CEO Jeff Patterson. “This increases the likelihood that Black students and other students of color will be inappropriately flagged for dangerous activity.”

While AI like that which Gaggle uses can’t recognize skin color properly, it is what many artists have called “sensitive” to photos showing skin in general. In Morris’ case, two female students’ shoulders appeared in her flagged photographs.

“The fact that the body is viewed as obscene in the first place hints at the idea that the human body has been made to be something strictly sexual,” The Horizon reporter Jasmine Wong said.

For young, impressionable students, seeing from both Gaggle and society that a shoulder is enough to be flagged red sends mixed messaging to these artists.

“For this specific project, it was centered on the feminine gaze and the sexualization of the female body,” Morris said. “Then it’s funny that it got Gaggled for like nudity and pornography.”

While Gaggle is a present and ever-evolving problem for the arts department, Perkins believes that being flagged and called to the office gives students a platform to talk about their work.

“This is a perfect time to use it as a platform to talk to people,” Perkins said. “We don’t just do stuff just to shock people. Everything is something they’re dealing with through art.”

Looking to the future, artists in the National Art Education Association are trying to stay ahead of companies like Gaggle, but they mainly focus on generative AI, not reactive AI.

“It is important to note that even when such efforts do not actually suppress particular types of expression,” the NAEA AI statement said, “they cast a shadow of fear for those who seek to avoid controversy. The arts cannot thrive in such a climate of fear.”

LHS students and staff are not anticipating changing their creative expression, and feel ready to face whatever Gaggle decides to Gaggle.

“Obviously, Opal and I aren’t going to change our work for a thing called Gaggle,” Farthing said. “They’re going to have to figure out how to adapt to a program like ours.”

This story was originally published on The Budget on February 9, 2024.