Introverts texting their fictional crushes. Influencers with artificial bodies and voices. Deepfaked political figures teaming up to play video games. Lawyers presenting AI’s words instead of their own. These scenes, which may have once appeared in science fiction novels, are now reality.

On Nov. 30, 2022, research nonprofit OpenAI launched ChatGPT. Within five days, the service had a million users. News agency Reuters reported that OpenAI projected $200 million in revenue for 2023. By the end of 2023, OpenAI's annualized revenue had reportedly topped $1.6 billion.

ChatGPT’s explosive debut sparked a media frenzy. Technically advanced and easy to use, the model launched AI into the mainstream. Tech enthusiasts embraced the program for daily use, while skeptics criticized the lack of regulation; some even feared the end of humanity itself.

While many may believe that AI is new, early forms of the technology have existed for decades, and related systems now run in everything from graphing calculators to TikTok’s algorithms. However, truly self-aware AI does not yet exist. What the public often calls AI is actually a collection of programs that can operate autonomously to some degree but lack independent consciousness.

Still, the technology is advancing at a staggering rate, and more fields of work and study are taking notice. Whether in education, expression, medicine or the spread of misinformation, professionals are recognizing that AI will soon be inextricably linked to how society functions.

Education

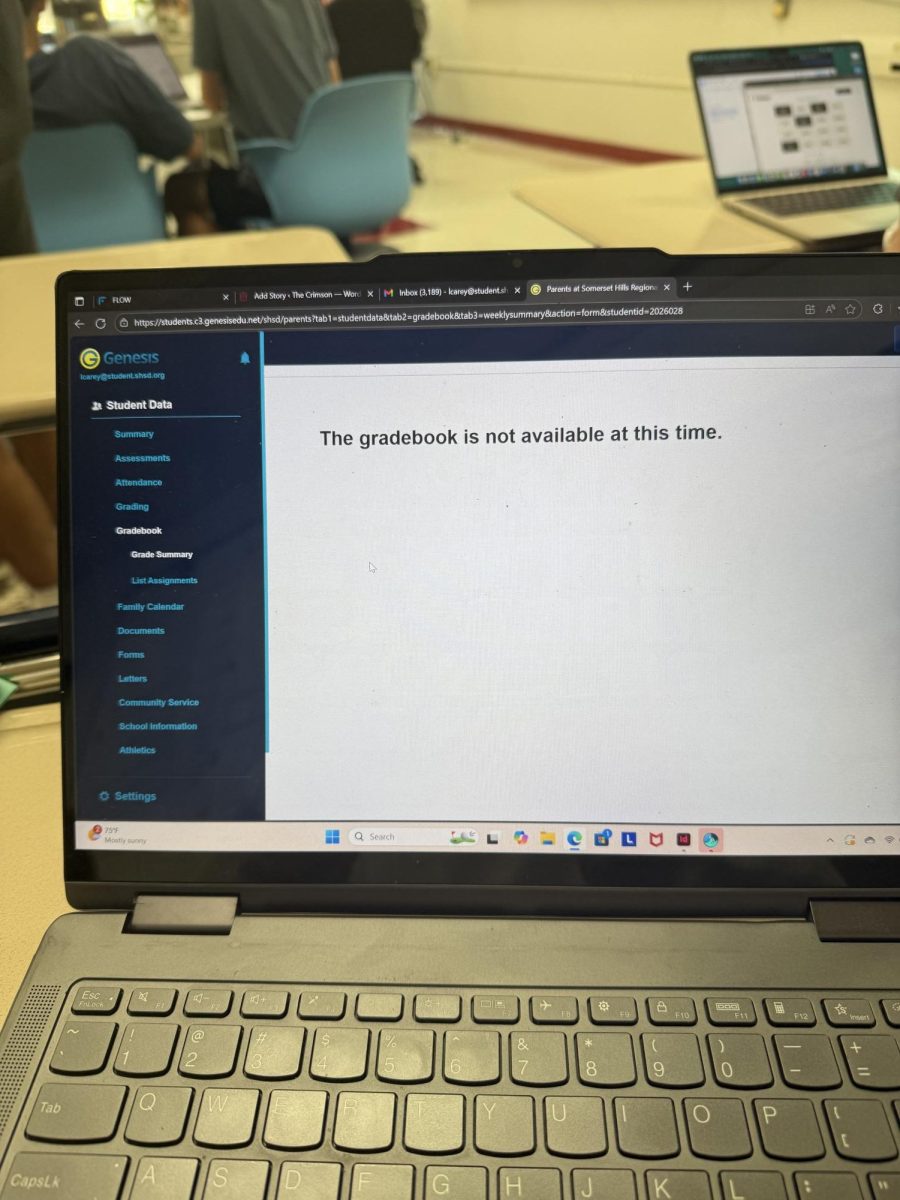

Generative AI tools such as ChatGPT and Google Bard have thrown the academic world into upheaval. Since their introduction, administrators have been racing to keep curriculum and policy up to date.

“It’s going to have to change the way teachers prepare assignments,” instructional technology coordinator Adam Stirrat said. “We used to get by by just picking a topic and saying, ‘write a paper on it.’ Doing that now invites opportunities for kids to use AI to write that paper. If you’re a teacher, you need to focus on the process of [the assignment], breaking it down. The learning process has to be more valuable than just a grade or a transcript.”

Some teachers find AI helpful for generating ideas, creating examples and giving feedback.

“It’s helping me write assignments,” English teacher Jon Frank said. “It’s helping me come up with writing samples quickly that I can use in my instruction and it’s also helping me write rubrics and assignments and scoring guides.”

However, in some cases AI adds to educators’ already demanding workloads.

“People are scared, rightfully, that it’s going to devalue the skill of writing and the skill of written communication,” Frank said. “It’s added a burden on educators, both in searching out and determining when AI has been used, and the extra work of educating students on how to use it responsibly.”

The novelty of AI has sparked conversation among students and teachers alike. In fall 2023, Sruti Sureshkumar (11), Michael Wise (11) and Noah Wolk (12) founded the AI Club.

“The club’s goal is to foster a positive impact through projects, collaborations [and] initiatives,” club president Sureshkumar said. “I wanted to promote AI literacy in our school, as AI is becoming more integrated into our society.”

Much of the stigma surrounding AI in education stems from students misusing the technology. Some students, though, are well aware of the risks that misuse poses.

“If you’re using this to cheat, in the long run that’s only going to hurt you,” Wise said. “Part of the philosophical concern about AI is that it allows someone to become thoughtless, because they can rely on AI. That could lead to this conceptual destruction of original thought, because AI is not really capable of it.”

Still, if it is properly integrated and regulated, AI has the potential to be a net positive for education.

“AI has this opportunity to be the universal tutor for everybody here,” Stirrat said. “Ultimately, it’s not something that we’re trying to just catch kids doing and punish them. I’d like to see AI not be a taboo topic as teachers. The future is AI, and kids are going to be using AI just like they use Google right now.”

Expression

AI is often used to create expressive media, whether visual, written or auditory. Image generators such as DALL-E and Midjourney let users type in a request and receive a finished picture, and other tools can do the same for music. However, what may seem like harmless fun has left professional artists wondering whether such technologies will replace them.

“One of my past students, Sam Tong, is a storyboard artist in California and he’s part of the animation guild,” fine arts teacher Patricia Chavez said. “He’s gone to Washington, D.C. with groups of people to try to educate lawmakers on the harms of AI art generators. I think a lot of professional artists are concerned about how AI can take jobs away or devalue skills that people take a whole lifetime to grow and develop.”

The rise of AI has sparked heated debate over the ethics of using it to create expressive media. Corporations often turn to AI-generated graphics because they can be produced quickly and efficiently. However, these businesses often overlook the fact that AI programs are trained on preexisting work, much of it made by human artists.

“With current AI art generators, you’re basically taking other people’s work and then mashing it up to create something new,” Chavez said. “That steals the ideas and the work of human artists. I think that that’s just unethical, and I worry about the loss of human creativity and human touch.”

While many worry that new AI tools will devalue human originality, composer Yueheng Wang (11) is not convinced.

“If you take something like autotune, it’s huge, everyone uses it, but it doesn’t necessarily take away from the people who can sing really well without it,” Wang said. “At that point, it boils down to the intrinsic value of human music and art versus what AI makes and whether audiences or people see [that value]. Some people will care and some people won’t. It just depends on their taste and why they’re listening.”

Others, such as writer Maddy Ta (10), see AI as a tool that can benefit artistic work.

“I think that the creative aspect of writing will never be lost,” Ta said. “AI is an infinite trove of ideas. We can benefit from it and can definitely use it to push us more outside the box.”

Amid the debate, Frank remains concerned about relying on AI.

“We think in language. If we hand the writing over to technology, then I fear that we will also lose some of our intellect and ability to communicate,” Frank said. “That, ultimately, is a humanity issue. If we lose our ability to think and express our [thoughts] in language, I think that that makes us less human.”

Medicine

The medical community, meanwhile, has taken strides to embrace AI.

“AI in medicine can have a whole number of potential benefits,” associate professor at Washington University School of Medicine John Schneider, M.D., said. “It can assist in so many different functions, particularly in patient care, and that’s something really exciting.”

Already, many medical professionals have incorporated AI into various aspects of the field, from facilitating patient communication to accumulating and organizing complex information and data sets. Eric Leuthardt, M.D., heads the Center for Innovation in Neuroscience and Technology at Washington University School of Medicine, where he has pioneered the use of AI in neuroscience.

“We created one of the early AI algorithms to do brain mapping,” Leuthardt said. “Historically, in order to remove a tumor you would have to do awake surgeries to know the critical areas of the brain so you could avoid them, which was a challenging task. So, we trained an AI algorithm so that it can automate the identification of these networks. This not only made the process much less invasive, but also cut the time it took from two days to fifteen minutes.”

In patient communication, AI could streamline correspondence between patients and physicians. The current system for handling medical inquiries is complex and often frustrating and time-consuming for both sides.

“We’re hoping to implement AI assistance in responding to patient queries,” K. Michael Scharff, M.D., regional chief medical information officer at SSM Health, said. “Not all of our time as a doctor is taking care of a patient in front of us, in the operating room or delivering babies. We spend a lot of time churning through stuff in the [electronic health record], specifically patients asking these questions. That has a specific cognitive burden on physicians because they have to pause what they’re doing to focus on answering that message.”

Scharff is not the only one who sees AI’s potential to ease communication between patients and doctors. Schneider also views these innovations as helpful tools.

“We’ve seen how AI can lead to many positives in communication between patients and physicians,” Schneider said. “[AI] can keep better track of patients’ medical history, which can [help] physicians with their own cognitive biases.”

The emergence of increasingly advanced AI programs has met varied reactions, especially in human-focused fields such as medicine. While many fear AI’s growing role in medicine, Leuthardt focuses on how it can be harnessed to advance and, more importantly, improve medical care.

“If you look at the evolution of technology, there’s always this tension surrounding change, but change is happening,” Leuthardt said. “You either engage with it, grow with it and reap the benefits or you get marginalized. That [was] true when horses and buggies were around and people were making cars. It’s just a new level of adaptation that our human species needs to accomplish and will be better for.”

Misinformation

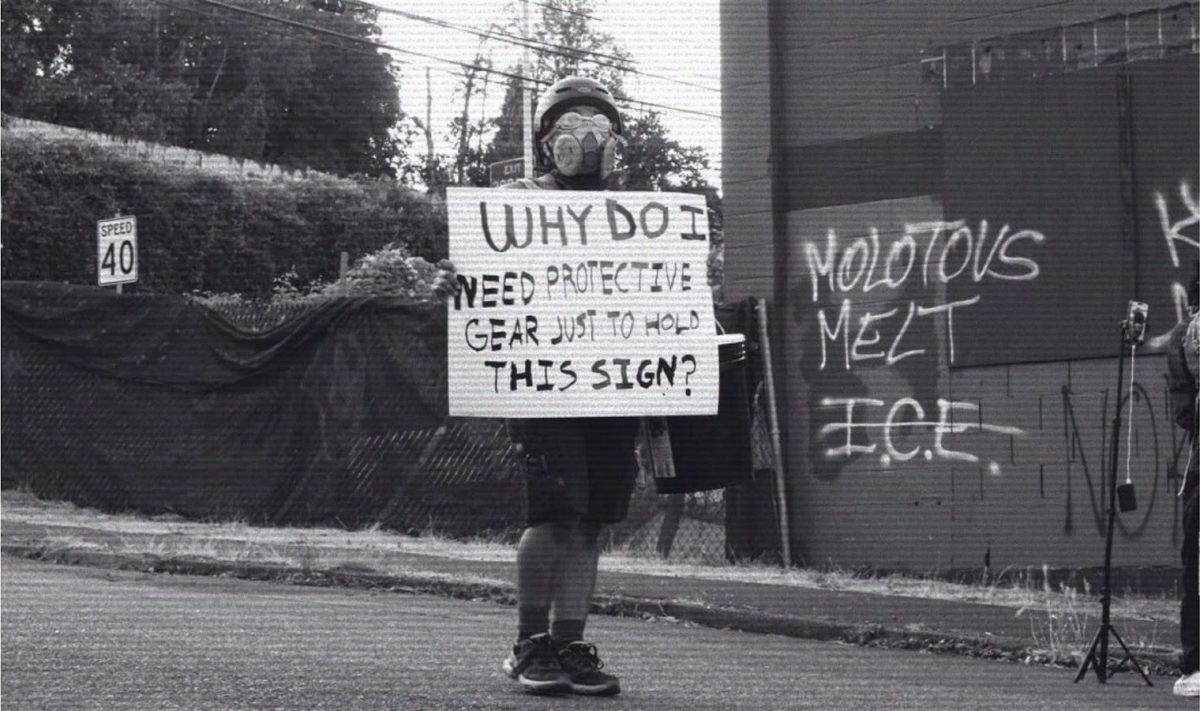

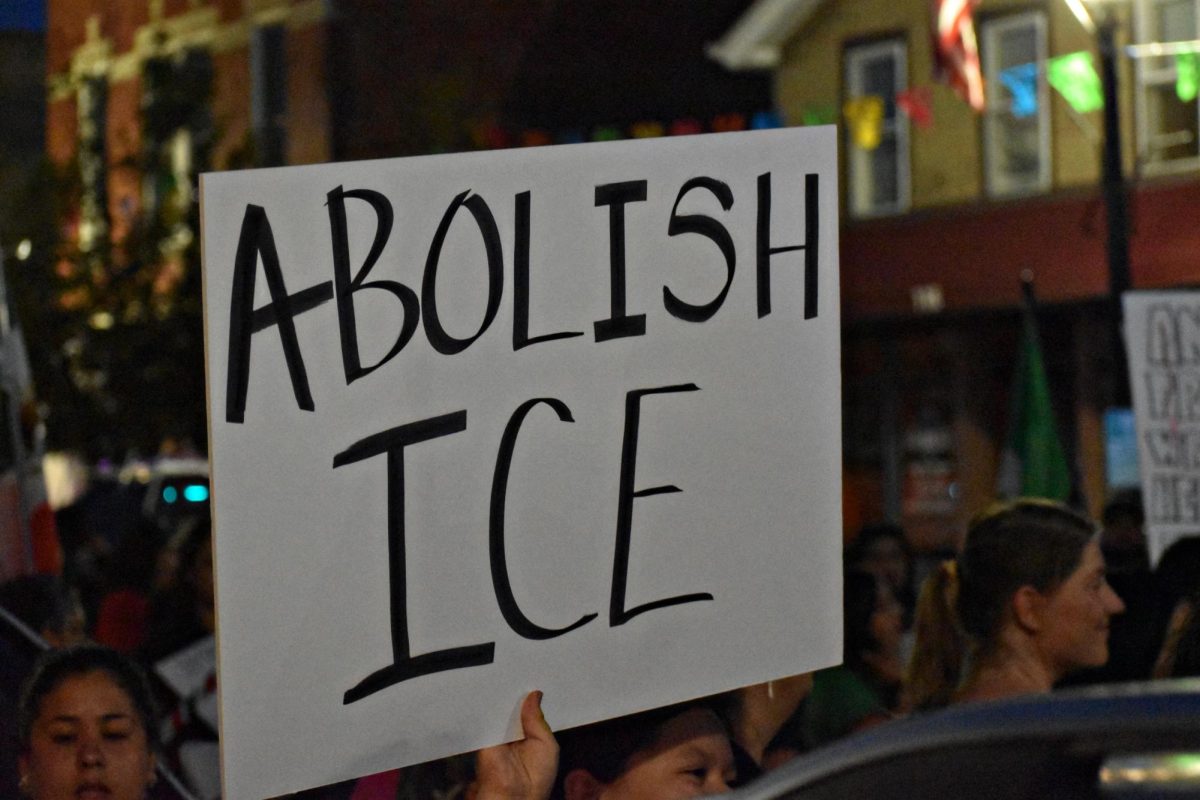

From deepfakes to mass-produced propaganda, one of the greatest fears about AI is its potential to spread misinformation. Graphics programs, for example, can produce startlingly realistic images and videos that are increasingly difficult to distinguish from authentic footage.

“We are currently seeing the use of graphics programs to create images, for example, in the Israel-Palestine conflict to create fake images,” head robotics programmer Aiden Lambert (12) said. “There’s a demonstration I saw online, [where] you render a scene of a bombing. Then you scale the image down and it looks like [a photo of the real thing]. That can be used to misinform people.”

Other generative AI tools, such as ChatGPT, are trained on massive amounts of text and data gathered from the internet and present information to users in condensed form. However, that information isn’t always reliable.

“The information AI spits out is based on data that the people who train [the AI] barely have any time to validate because it’s millions of pages,” Wolk said.

The sheer volume of this information may make preventive measures difficult to put in place, especially as ethics takes a back seat to rapid technological development.

“It’s like trying to drain the ocean with a little Dixie cup,” Stirrat said. “It’s a tsunami of influence. We can’t quite grasp some of its faults.”

Often, countering AI misinformation can feel like an uphill battle for activists and developers.

“It’s really hard, especially if the misinformation that’s being spread is what people want to hear,” Wise said. “[People are] obviously going to be more willing to believe [it] if it’s what they want to hear. Part of this shows a lack of regulation within AI itself. With OpenAI and ChatGPT, there’s a distinct lack of regulation.”

OpenAI, the company behind ChatGPT, has come under fire for its handling of misinformation. In November 2023, CEO Sam Altman was ousted by the company’s board, reportedly in part over concerns about his approach to ethics and safety. He returned days later, and most of the board members who had removed him stepped down. To many, the controversy revealed how susceptible these companies are to corruption.

“There will always be biases based on this capitalistic way,” Stirrat said. “You have to realize that [AI is] being run by companies, and companies are run by people. If that’s the case, then they’re always going to have an agenda they’re trying to sell.”

With preventive measures lagging, users are taking the fight against AI-generated misinformation into their own hands.

“[Misinformation] is going to have to be something that’s addressed,” Wise said. “People need to be on the lookout for it. The quicker that happens, the more AI literacy there will be, which will allow for better regulation, more understanding [and] less fear.”

This story was originally published on Panorama on January 24, 2024.