Paying with our privacy: How online services strip consumer rights

Big tech’s monopoly on data threatens our democracy

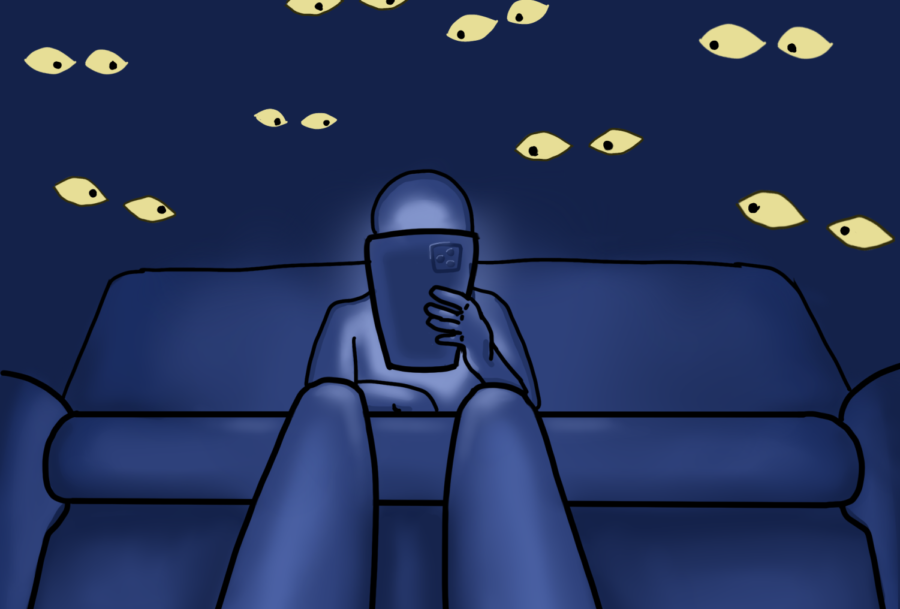

Today, the vast majority of social media apps monitor your every activity, keeping you on your screen to turn a profit. We must reconsider the compromise we’ve so easily made with corporations: sacrificing our privacy for the conveniences of technology.

November 18, 2022

“I certify that I have read, understood, and agree to the terms and conditions.”

Accompanied by pages of legal jargon and abstruse wording, these certifications seem to serve no purpose for the ordinary user. Such documents are often time-consuming to peruse and difficult to comprehend, so most users skip over them and simply check the box. Yet nefarious phrases lurk inside these agreements, forcing us to forfeit our data completely. Caught between wanting to use online services and wanting to maintain our privacy, we face technologies that have become increasingly complicated, and sometimes even dangerous.

The terms and conditions of Silicon Valley’s largest tech giants

Living in the heart of Silicon Valley, we frequently imbibe the doctrine of tech optimism: glorious visions of a digitalized future brought about by technology. Yet the contrary is just as apt. Unprecedented advancements have let tech giants monopolize the field, influencing our lives in subtle ways. Most of the content we consume is digital, but the connectivity we reap from this online ecosystem comes with a steep price: our data. By consenting without reading privacy notices, we surrender our data to corporations, unconditionally granting them the right to sell and distribute that information. Because these data reveal every aspect of our lives, many groups, including the American Civil Liberties Union, believe it is time to consider the impact of technology on society and whether we should accept the terms online platforms prescribe.

For upper school computer science teacher Anu Datar, the greatest problem with current tech policy lies in the ambiguity surrounding how our data can be used. With no clear-cut regulation on privacy, companies are mostly free to use consumers’ data as they desire.

“There’s a lot of gray areas, there’s a lot of misuse of personal information,” Datar said. “Many times users click on ‘accept Terms and Conditions’ without really reading through them. That allows many of the app developers or even some third party software builders to misuse their personal information in ways that are actually pretty dangerous. That’s the biggest thing, people not realizing how the information that they’re putting out there can be used.”

And we give information about ourselves to tech companies constantly, often without realizing it. To many, casually scrolling through Instagram feeds or watching TikTok videos offers a comforting respite from the stress of everyday life. But even in these little moments, unseen algorithms run behind the scenes, tracking our data and preferences. On the surface, this consumer surveillance may seem innocuous: companies work for profit, and exchanging some privacy for the convenience and entertainment of social media is a trade many would make. However, because companies sell and disseminate our data, the true scope of the privacy we lose becomes clear only when we understand how unrelated third parties may use it.

“Sometimes the information usage is acceptable; they show me targeted ads and I’m sort of okay with that because it doesn’t really hurt or harm me,” Datar said. “But then there are other things — it’s tracking my location, it’s also aware of when I am away from home, my patterns. If my data or my information about everything, like my spending habits [or] my eating, [is being tracked], then I’m vulnerable to being attacked in a bunch of different ways.”

Social media’s descent into polarization

In the quest for profit, corporations have not just cajoled us into sacrificing our privacy; they have also increasingly polarized public opinion. Social media has become one of the most popular outlets for communicating and sharing information, giving a few select companies unprecedented control over public discourse.

As of 2022, the average person on the internet spends 147 minutes per day on social media, the highest amount ever recorded. Though these platforms may seem to promote fair and inclusive dialogue, they are not public forums designed solely for users’ free discussion; more accurately, they are tools run by for-profit corporations. To maximize profit through increased user interaction, these platforms promote increasingly extreme content rather than a diversity of viewpoints, trading the integrity of online communication for clicks and views. Since controversy fuels engagement, and engagement drives profit, these companies have no financial incentive to foster dialogue among a diverse range of people, which worsens polarization.

This phenomenon of surrounding users with only like-minded ideas is known as an echo chamber, named for the way similar ideas echo through a user’s social media feed. Ethics and Philosophy Club Vice President Kabir Ramzan (11) emphasizes the problem with these systems.

“You’re surrounded with opinions that kind of reinforce your own beliefs, and you’re surrounded by content that you want to see more of,” Kabir said. “It grows the problem because now you’re not having conversations with another perspective and you’re just validating your own [opinions] even more. That can lead to really dangerous outcomes where you get focused on one belief, and maybe it’s a belief that’s just wrong, [but] everyone keeps agreeing with you.”

The problem arises when people with different opinions stop interacting. Constantly consuming similar content further reinforces one’s beliefs, regardless of how misguided they may be. Such validation turns into polarization as individuals are led toward increasingly extreme content that aligns with their own opinions. At times, this polarization sparks greater problems, such as hateful speech and blatant misinformation. Social media companies use content moderation to address this problem, removing posts as they see fit. Generally, this power is beneficial, allowing platforms to remove hate and foster a healthy environment. For instance, Twitter has removed tweets that spread false or misleading information about COVID-19 vaccines. Because First Amendment free speech protections restrict the government rather than private companies, platforms are able to take these measures. But such moderation can just as easily be used to censor everyday users.

“All the social media companies are private organizations that [work] for profit [and are] their own companies,” Kabir said. “They have their own internal rules, and they don’t have to follow certain laws that [apply to a] public forum. Free speech on social media is not a thing, because that’s only applied in a public setting. And then the social media company is a private corporation, and can regulate and moderate whatever they want on that platform.”

Content moderation in private for-profit companies

Moreover, content moderation presents many technical and social issues. The two main approaches to moderation are algorithmic and manual. Given the advances in AI that have accompanied the rise of social media, many large platforms have begun relying on automated moderation. Though efficient, these algorithms still make frequent mistakes, sometimes letting harmful content slip through and at other times censoring all discussion of a controversial topic altogether. With recent studies showing that social media platforms amplify misinformation over the truth, these small errors may have tremendous impacts. Manual moderation, on the other hand, is extremely time-consuming and takes a psychological toll on human moderators, who are inundated with harmful content daily. With content algorithms pushing increasingly inflammatory messages, moderation is more necessary than ever to counter the polarization social media fosters.

In light of these issues, one company that has placed increasing focus on developing ethical technology is OpenAI, an artificial intelligence research and development company. OpenAI Member of Technical Staff Valerie Balcom explains that the need for moderation stems from a mindset ingrained in big tech, in which companies optimize for specific metrics to simplify a larger, more complex problem.

“Speed and efficiency are the biggest challenges in emerging technologies; everything is always getting faster and smaller,” Balcom said. “If you’re targeting the wrong metric, you can end up with unexpected side effects and terrible outcomes. Unfortunately, [companies] are not incentivized to make safe incentives or metrics.”

In 2016, Facebook Vice President of Ads and Business Platform […]. YouTube executives have said that they equate watch time with overall user happiness, which focuses their efforts primarily on increasing that single metric. While such assumptions simplify the problem, they also risk creating unintended consequences. For instance, social media platforms’ pursuit of maximum engagement has increased polarization and exacerbated political divisions. Choosing the right metrics is yet another roadblock on our path to a more sustainable tech environment, one where privacy and truth are valued more than profit.

We have seen the fragility of our country’s democracy and the increasing divisiveness among citizens. Social media has only exacerbated these longstanding issues, torn between its interests as a private, for-profit company and its role as an equitable forum for the public exchange of ideas. As these platforms have zeroed in on profit, they push increasingly radical content toward users to drive further interaction, leaving behind an unintended consequence: a more polarized society.

As we proceed into this uncharted territory of technology, we have essentially given the tech giants that loom over the field control of our lives, and their algorithms have fed us harmful content in pursuit of greater profits. We know such a system is unsustainable; we know it must change. But to enact the proper changes and protect our values of free speech and democracy, technologists assert that we must reconsider the compromise we’ve so easily made with corporations: sacrificing our privacy for the conveniences of technology, and too easily consuming conversations orchestrated in the companies’ interests rather than ones we build as active participants.

This story was originally published on Harker Aquila on November 12, 2022.
